Infinitely better.

100% of the reads removed. Roughly 4000 (okay, 3890), down to zero.

None.

Let me explain…

Obviously if there’s data being returned, there are reads needed. And obviously there is some CPU needed for SQL to be given a query and do anything at all. Luckily for me, performance of a query is typically evaluated using the number of reads performed. That’s what I’m looking at.

Recently I came across a query that typically returns no rows. It was looking for rows in a table that met a particular condition, joined those rows to a bunch of other tables, and return the result. But yeah, typically no rows returned. Estimating a few, but none actually coming out.

Consider the following query, on AdventureWorks.

|

1 2 3 4 5 6 |

SELECT * FROM Sales.SalesOrderDetail as od JOIN Production.Product as p on p.ProductID = od.ProductID JOIN Production.ProductModel as m on m.ProductModelID = p.ProductModelID LEFT JOIN Production.ProductSubcategory as s on s.ProductSubcategoryID = p.ProductSubcategoryID where od.OrderQty > 1200; |

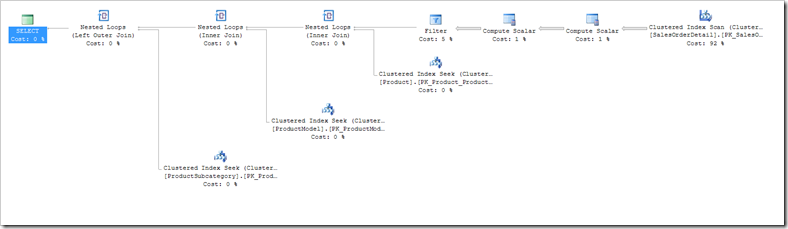

Running this query gives me no rows back, but well over a thousand reads.

As well as this, it suggested a missing index.

|

1 2 3 |

CREATE NONCLUSTERED INDEX OrderQtyIndex ON [Sales].[SalesOrderDetail] ([OrderQty]) INCLUDE ([SalesOrderID], [SalesOrderDetailID], [CarrierTrackingNumber], [ProductID], [SpecialOfferID], [UnitPrice], [UnitPriceDiscount], [LineTotal], [rowguid], [ModifiedDate]); |

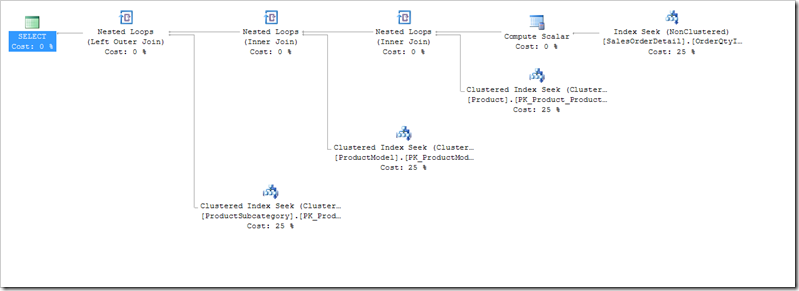

Creating this index does indeed reduce the reads. A lot too! Instead of scanning the Sales.SalesOrderDetail table, it can use an Index and quickly find any rows that have more than 1200 items sold.

This is good. But is it worth stopping here?

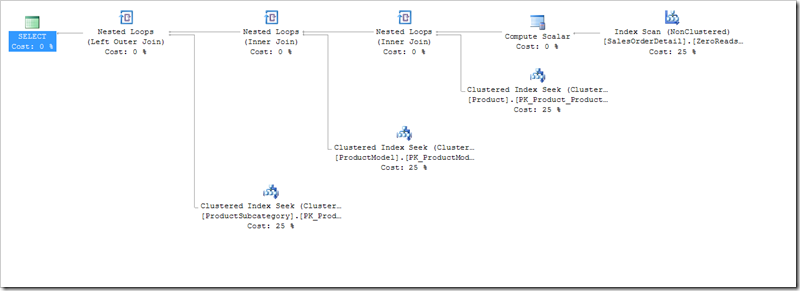

The index that I created gives a slightly different plan. It doesn’t perform a Seek on an index on the Sales.SalesOrderDetail table, it does a Scan! But zero reads.

The difference is a filter.

|

1 2 3 4 |

CREATE NONCLUSTERED INDEX ZeroReadsIndex ON [Sales].[SalesOrderDetail] ([OrderQty]) INCLUDE ([SalesOrderID], [SalesOrderDetailID], [CarrierTrackingNumber], [ProductID], [SpecialOfferID], [UnitPrice], [UnitPriceDiscount], [LineTotal], [rowguid], [ModifiedDate]) WHERE OrderQty > 1200; |

Now, we have a completely empty index. Scanning this index is zero reads. It’s just like having a table with no rows in it. This index gets only populated with a row whenever a qualifying row appears in the underlying table. When that happens, there’s few reads required to be able to get the necessary data out of the other tables. But for 99.9% of the time this query is run, there are now NO READS. For the real scenario, that row gets picked up and removed quite quickly, returning the query to the ‘zero reads’ scenario.

Do you have frequently-run queries that typically return no rows, because there’s a predicate that is rarely satisfied? How about turning that predicate into an index filter, and seeing if you can reduce a big chunk of the footprint?

This Post Has 15 Comments

Hi Rob, thanks for this, good post. Filtered indexes aren’t something I use at all at the moment so a great reminder to look into it!

Rob,

Thanks for the post. I’ve played around with filtered indexes in the sandbox but don’t have any in production. I’ll keep this use case scenario in mind.

Cheers,

Andre Ranieri

Andre – they’re useful even if they don’t filter out everything. If you have a common filter such as "IsActive = 1", then a filtered index can be really useful then too. It’ll shrink the number of rows that appear in the index, reducing the footprint and decreasing the reads accordingly. Imagine you’re sorting rows by date, but only interested in those that are Active. Without the filter, you’re keeping all the Inactive rows in the index, even though you’re not interested in them.

Rob – the lightbulb just turned on. That’s a use case I run into fairly often.

Thanks again for the great blog.

Andre

I’ve found filtered indexes useful when the query uses defined subsets and consistent search criteria.

Filtered statistics is another technique to consider. I have some scenarios on http://www.sqlserver-dba.com/2011/07/filtered-statistics-t-sql-best-practises-for-t-sql-tuesday.html

Based on your other blog entry… is there potential for a filtered index like this to "break" queries, by changing the data they return? If you do 80% of your queries for "IsActive=1", but the other 20% on all rows, will the filtered index cause any danger of it being used when looking at all rows? Is there a "best practice" for doing things like creating two indexes, one filtered one not, so the optimizer has the best index to choose in each case?

Jack – yes, that’s a useful benefit too.

pmbAustin – Indexes shouldn’t change the results of a query. If an index doesn’t provide sufficient coverage of the table to satisfy a query, the SQL engine won’t pretend it does. A filter like IsActive=1 will only be used for queries that include an IsActive=1 predicate.

I agree with Andre – the light bulb just turned on! I haven’t seen it presented in such a manner that makes such sense. Thanks!

Great post, Rob.

I just feel the need to emphasize the needed SET options when working with filtered indexes, as these settings a mandatory for all connections hitting that table.

So not only at create time of the index !

( bol/technet weren’t clear on this : http://technet.microsoft.com/en-us/library/ms188783.aspx )

Been bit by it once ( on a production system after dev/QA worked fine but apparently not all apps had been tested ).

"UPDATE failed because the following SET options have incorrect settings: ‘QUOTED_IDENTIFIER’. "

This blog helped me diagnose and solve the issue: http://akashonly.blogspot.be/2012/01/filtered-index-and-quotedidentifier.html

Indeed! The same applies for ANSI_NULLS, which must be ON. There are actually a bunch of things that require those settings, and apparently one day SQL will refuse connections which don’t have those settings configured properly.

I can see lots of usage scenarios for this, thanks for the easy to follow example.

I didn’t get what you mean by this part "For the real scenario, that row gets picked up and removed quite quickly, returning the query to the ‘zero reads’ scenario."

In the real scenario where you are looking for order quantity > 1200, why would a row in the index be removed in the query? I think I am just reading that wrong, could you explain it simply?

Hi David,

This example was inspired by some tuning I did for a client a while back. The filter was looking for rows that met a particular condition, because things in that condition needed attention. Once they had been seen to, the condition wasn’t true any more, so the filtered index returned to being empty.

Another scenario could be to have a "Payment Pending" situation. Most people pay straight away, but occasionally you might have something which enters a special "needs attention" state for a short while.

Does that help?

Rob

Another great opportunity for filtered indexes is where you have large data skew. You can get the right query plan both ways (either scans/hashes for the skew(ed) values or seeks/nested loops for onesy-twosy values) with indexes that are a fraction of the normal index size.

Yes – I see a lot of potential around filtered indexes. They also help if you have a fixed inequality predicate and want the data ordered by something else. So much potential…

Great post, a very useful and smart algorithm…

Ian